
(Until I make it less subjective, this section definitely doesn't belong in a research paper)

The Evolution of CG

Computer graphics has grown in complexity over the decades. Once capable of drawing only lines and boxes, computers can now draw complex scenes of near-photorealistic quality, thanks mostly to Moore's law.

The theories behind CG have been known since the 1960s. The first 3D graphics libraries were mostly proprietary tools developed by universities and companies such as Silicon Graphics. Then, in the late 1980s, several standardized graphics libraries came into being.

RenderMan

The movie industry was one of the first major users of computer graphics. RenderMan is a high-level graphics language that was originally designed to produce extremely complicated and photorealistic effects in movies. Practically every movie special effect has used RenderMan in some way (here's a list: https://renderman.pixar.com/products/whatsrenderman/movies.html).

The liquid metal T-1000 in Terminator 2 was a landmark in CG.

Sulley in Monsters Inc. is modeled with roughly two million computer generated hairs.

RenderMan is a minimal graphics language compared to many of today's graphics libraries. It has no concept of rendering details such as depth buffers or multi-texturing, nor does it impose limits such as a maximum number of lights. Instead, RenderMan's most powerful feature is its shaders. RenderMan shaders are essentially C-like functions that give CG artists nearly unlimited programmability in how things are drawn. Rather than offering fixed-function lighting or texture-combining modes, RenderMan implements all rendering effects as shaders. As a result, RenderMan is very general purpose: it has changed little in twenty years and continues to meet the requirements of today's CG industry.
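To make the idea of "a shader is just a function" concrete, here is a minimal sketch in Python. It is not RenderMan Shading Language and does not use any RenderMan API; the names `mix` and `striped_surface`, the colors, and the stripe frequency are all hypothetical and chosen only for illustration. The point is that the surface color is computed from the surface position and the light direction, with no texture image involved.

```python
import math

def mix(a, b, t):
    """Linearly blend two RGB tuples: t=0 gives a, t=1 gives b."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))

def striped_surface(s, normal, to_light):
    """Hypothetical procedural surface shader (illustration only).

    s        -- surface parameter along one direction
    normal   -- unit surface normal as an (x, y, z) tuple
    to_light -- unit vector from the surface toward the light
    Returns the shaded RGB color as a tuple.
    """
    red = (0.8, 0.1, 0.1)
    yellow = (0.9, 0.8, 0.2)

    # Pattern: smooth stripes, 8 per unit of s, computed rather than looked up.
    stripe = 0.5 + 0.5 * math.sin(2.0 * math.pi * 8.0 * s)
    base = mix(red, yellow, stripe)

    # Simple diffuse lighting: clamp the cosine of the angle to the light at 0.
    diffuse = max(0.0, sum(n * l for n, l in zip(normal, to_light)))
    return tuple(c * diffuse for c in base)

if __name__ == "__main__":
    facing = (0.0, 0.0, 1.0)
    for s in (0.0, 1.0 / 32.0, 3.0 / 32.0):
        print(s, striped_surface(s, facing, facing))
```

Changing the look of the surface means editing the function body, not switching between a fixed set of renderer modes, which is the kind of flexibility the paragraph above describes.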

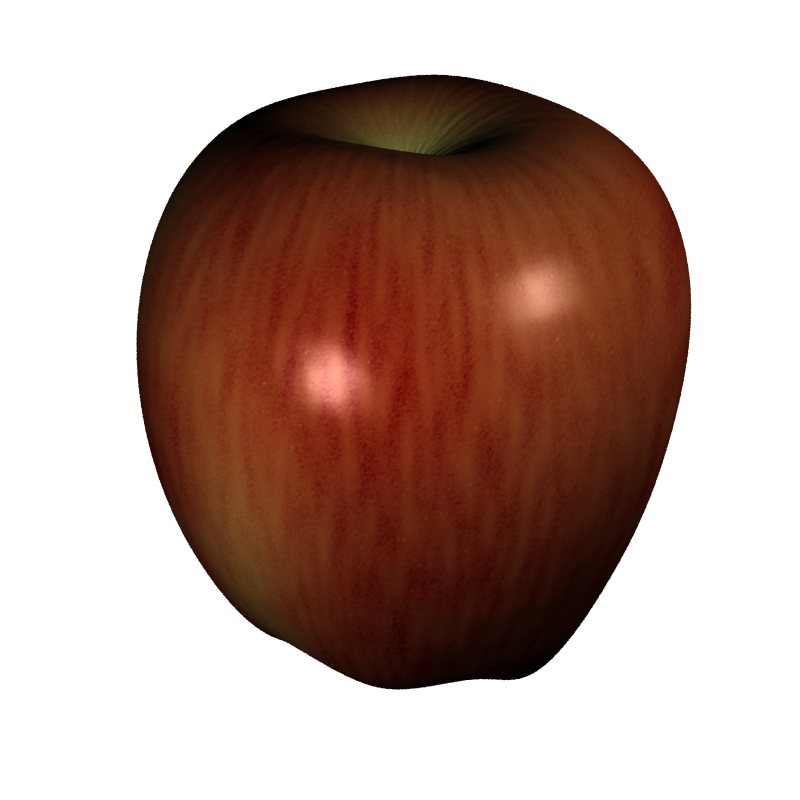

The following is an example of a shader used to draw an apple. No texture mapping is involved: apart from the geometry data, the entire appearance of the apple's surface is defined by this surface shader:

Despite popular belief, RenderMan is not a ray tracer; the rendering algorithm is implementation dependent. Pixar's PRMan implementation uses the REYES (Renders Everything You Ever Saw) algorithm, developed at Lucasfilm's computer graphics division (which was spun off and became Pixar in 1986). REYES draws a scene in a fraction of the time that ray tracing would take. Even with this algorithm, drawing RenderMan scenes takes mammoth amounts of computing power. Toy Story, the first completely computer-generated feature film, took 800,000 hours of computer time (~50 hours real time) just to produce less than two hours of actual film. Industrial Light and Magic pioneered the use of renderfarms, networks of hundreds or thousands of computers working on the drawing process, and reportedly has the second-largest amount of computing power in the world after the Defense Department.

OpenGL

First introduced in 1992 by Silicon Graphics, OpenGL has been widely used for high-performance applications such as CAD and scientific visualization, as well as in interactive games. Unlike RenderMan, OpenGL is a lower-level graphics library.

OpenGL lacks the user programmability of RenderMan but makes up for it in speed. Unlike RenderMan, OpenGL can draw in real time, because operations such as drawing a triangle or applying a texture map can be executed very quickly by specialized hardware.

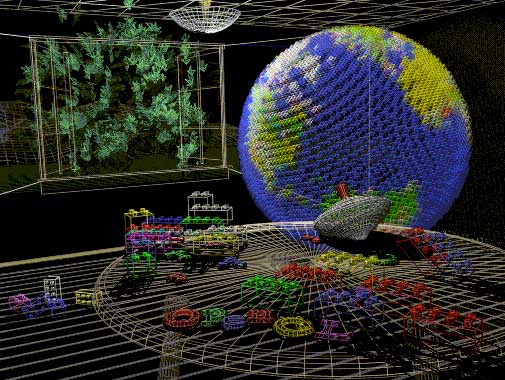

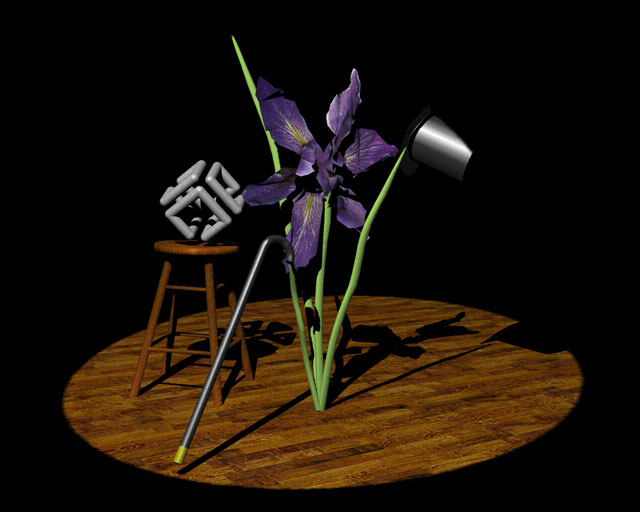

The following are image plates and descriptions taken from the OpenGL Red Book (the image quality might be less than optimal because the images were scanned from the book).

A scene with objects rendered as wireframe models

The same scene with texture maps and shadows added

A dramatically lit and shadowed scene, with most of the surfaces textured. The iris is a polygonal model.

Direct3D vs. OpenGL

No 3D graphics discussion is complete without mentioning Direct3D. In my opinion, Direct3D and DirectX are the epitome of Microsoft's desire to reinvent the wheel. Microsoft realized that Windows 95 wasn't suitable as a gaming platform, so it decided to add APIs to make Windows viable for games. Just as it ripped off Word from WordPerfect and Internet Explorer from NCSA Mosaic, it bought RenderMorphics, a British company, to acquire its 3D graphics library. As always before adopting anything not its own, Microsoft had to "improve" it.

The first releases of Direct3D were barely workable. They were a pain to program with because of their reliance on low-level structs, and OpenGL was superior in almost every way. DirectX 6 was the first truly workable version, and every succeeding version made marginal improvements. As of this writing, DirectX 9 has evolved to the point that its 3D API is essentially the same as OpenGL's. However, each version of DirectX since 6.0 is incompatible with earlier versions, so separate run-time support is needed for each version. OpenGL, on the other hand, has been backward compatible since version 1.0 and is supported on almost every computer platform there is; DirectX is supported only on Windows. However, Microsoft evangelists claim that OpenGL is only theoretically portable and that DirectX is only theoretically not portable.

Direct3D does have an advantage over OpenGL in that it is more object oriented (you specify which context you want to render to) and has much better support for video hardware extensions. Game makers are even starting to drop OpenGL renderers in favor of Direct3D ones (e.g. Half-Life 2). Microsoft evangelists at work?

The Future

The current trend in CG is an increasing emphasis on both user programmability and speed. RenderMan may be very general, but it is not at all suitable for drawing interactive environments. Lower-level graphics libraries such as OpenGL offer real-time rendering but consist mostly of a fixed-function rendering pipeline. New shading languages such as NVIDIA's Cg, the OpenGL Shading Language, and Direct3D's HLSL are all attempts to bring more programmability to low-level graphics libraries by introducing shaders that run at interactive rates on dedicated video hardware.
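The difference between a fixed-function pipeline and a programmable one can be sketched in a few lines of Python. This is only a conceptual model, not code for any real graphics API: the functions `shade_fixed` and `shade_programmable` and the example shader `invert_texture` are hypothetical names, and the two built-in modes are only loosely modeled on the "replace" and "modulate" texture-combining modes of fixed-function OpenGL.

```python
# Fixed function: the library exposes a closed menu of combining modes.
# The caller can pick one, but cannot add new behavior.
FIXED_MODES = {
    # Ignore the surface color and use the texture color as-is.
    "replace": lambda surface, texture: texture,
    # Multiply the surface color by the texture color, channel by channel.
    "modulate": lambda surface, texture: tuple(
        s * t for s, t in zip(surface, texture)
    ),
}

def shade_fixed(mode, surface, texture):
    """Hypothetical fixed-function stage: select a built-in mode by name."""
    return FIXED_MODES[mode](surface, texture)

def shade_programmable(shader, surface, texture):
    """Hypothetical programmable stage: run whatever function the user supplies."""
    return shader(surface, texture)

def invert_texture(surface, texture):
    """A user-written effect that the fixed menu above does not offer."""
    return tuple(1.0 - t for t in texture)

if __name__ == "__main__":
    gray = (0.5, 0.5, 0.5)
    orange = (1.0, 0.5, 0.0)
    print(shade_fixed("modulate", gray, orange))
    print(shade_programmable(invert_texture, gray, orange))
```

In this model, an effect outside the built-in menu is simply unavailable in the fixed-function case, while the programmable case accepts any function. Shading languages aim to offer the second style while still running on dedicated hardware.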

Sources

<http://www.geocities.com/CollegePark/5323/1980.htm>

<http://www.accad.ohio-state.edu/~waynec/history/timeline.html>

Ye Zhang 2004-06-07