- C. Bauer Archival of Articles via RSS and Datamining Performed on Stored Articles

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (1st Period)


- S. Ditmore Construction and Application of a Pentium II Beowulf Cluster

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

(Poster) (1st Period)


- M. Druker NO PAPER - MARCH 2005

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (1st Period)


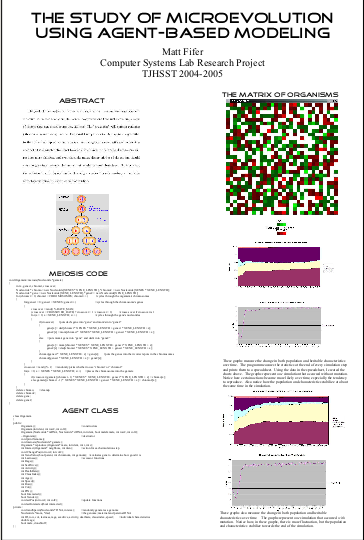

- M. Fifer A Study of Microevolution Using Agent Based Modeling

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (need PDF) (1st Period)


- J. Ji Natural Language Processing: Using Machine Translation in the Creation of a German-English Translator (1st Period)

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (need PDF)

Presentation


- A. Kim A Study of Balanced Search Trees: Brainstorming a New Search Tree (1st period)

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

- J. Livingston KDUAL Kernel Debugging User-Space API Library (proposal)

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

- Jack McKay An Analysis of the Use of Sabermetric Statistics in Baseball (need PDF) (1st period)

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

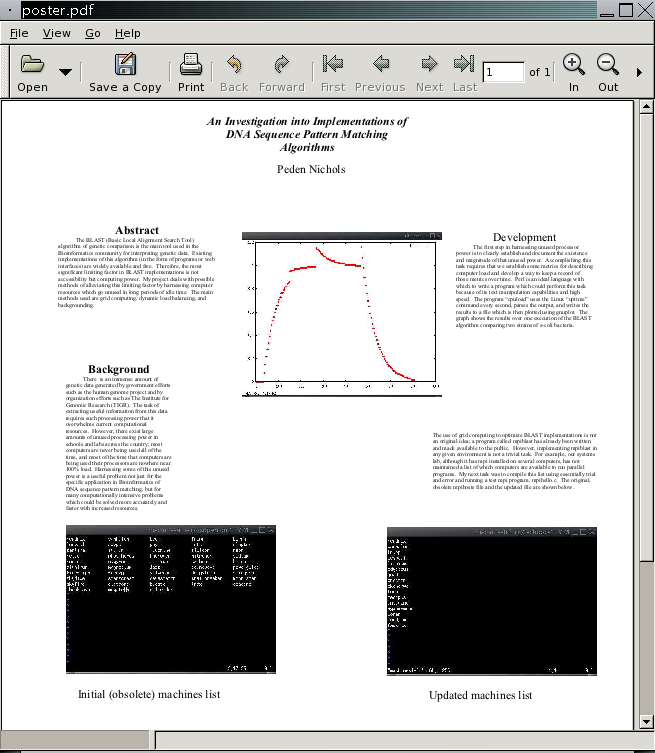

- P. Nichols

An Investigation into Implementations of DNA Sequence Pattern Matching Algorithms (1st period)

(html)

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

- R. Staubs Part-of-Speech Tagging with Limited Training Corpora (1st period)

- Problem Statement:

How well does part-of-speech tagging perform using a very limited general corpus? How well does it do using specific-genre corpora? Do other methods improve on the standard?

- Method for solving the problem:

Training data consists of the tags, words, and transitions represented in the corpus, and the frequency of each. Words and tags are read in from the corpus and stored alphabetically or in parallel in a series of arrays and matrices. This data is extracted and used to create the probabilistic representation of a Markov Model, which is used for the tagging of words.

- Sample result(s), finished product:

Final results have not yet been attained, but when they are, they will be charts of correct taggings, both overall and for each type of tag, for each method of tagging.

- Research:

Many different methods of POS tagging have been advanced in the past, but none gives hope of "perfect" tagging at the current stage. Accuracy of over 90% on ambiguous words is typical for most methods in current use, and is often well exceeded. POS taggers cannot at the current time mimic human methods for distinguishing part of speech in language use. Work to get taggers to approach the problem from all the expected human methods (semantic prediction, syntactic prediction, lexical frequency, and syntactic category frequency being the most prominent) has not yet reached full fruition. The problem of corpus size has also not been approached much recently. In the past, limited corpora were all that could be used, but enormous ones are now the norm. Finding out how modern methods work on restricted-size corpora is useful if taggers are ever to be expected to do their own training, or for other issues in application.
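
The counting-and-tagging approach described in the method can be illustrated with a small bigram hidden Markov model. This is a minimal sketch and not the project's code: the function names `train` and `viterbi`, the `<s>` start marker, and the add-one smoothing are assumptions made for illustration, and the input sentence is assumed to be non-empty.

```python
import math
from collections import Counter, defaultdict

def train(tagged_sentences):
    """Count tag, word/tag, and tag-transition frequencies from a corpus."""
    tag_counts = Counter()
    emit_counts = defaultdict(Counter)   # tag -> word -> count
    trans_counts = defaultdict(Counter)  # previous tag -> tag -> count
    vocab = set()
    for sentence in tagged_sentences:
        prev = "<s>"  # hypothetical start-of-sentence marker
        for word, tag in sentence:
            tag_counts[tag] += 1
            emit_counts[tag][word] += 1
            trans_counts[prev][tag] += 1
            vocab.add(word)
            prev = tag
    return tag_counts, emit_counts, trans_counts, vocab

def viterbi(words, model):
    """Return the most probable tag sequence under the bigram model."""
    tag_counts, emit_counts, trans_counts, vocab = model
    tags = sorted(tag_counts)
    n_tags, n_words = len(tags), len(vocab) + 1  # +1 leaves room for unseen words

    def log_trans(prev, tag):
        # Add-one smoothing (an assumption) keeps unseen transitions possible.
        total = sum(trans_counts[prev].values())
        return math.log((trans_counts[prev][tag] + 1) / (total + n_tags))

    def log_emit(tag, word):
        return math.log((emit_counts[tag][word] + 1) / (tag_counts[tag] + n_words))

    # best[i][t] = (log probability, backpointer) of the best path ending in tag t
    best = [{t: (log_trans("<s>", t) + log_emit(t, words[0]), None) for t in tags}]
    for word in words[1:]:
        column = {}
        for t in tags:
            score, back = max((best[-1][p][0] + log_trans(p, t), p) for p in tags)
            column[t] = (score + log_emit(t, word), back)
        best.append(column)

    # Follow backpointers from the best final tag.
    tag = max(tags, key=lambda t: best[-1][t][0])
    path = [tag]
    for column in reversed(best[1:]):
        tag = column[tag][1]
        path.append(tag)
    return list(reversed(path))
```

With a very small corpus, most word/tag pairs are unseen, so the choice of smoothing is likely to matter a great deal; that sensitivity is one way to frame the limited-corpus question posed above.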


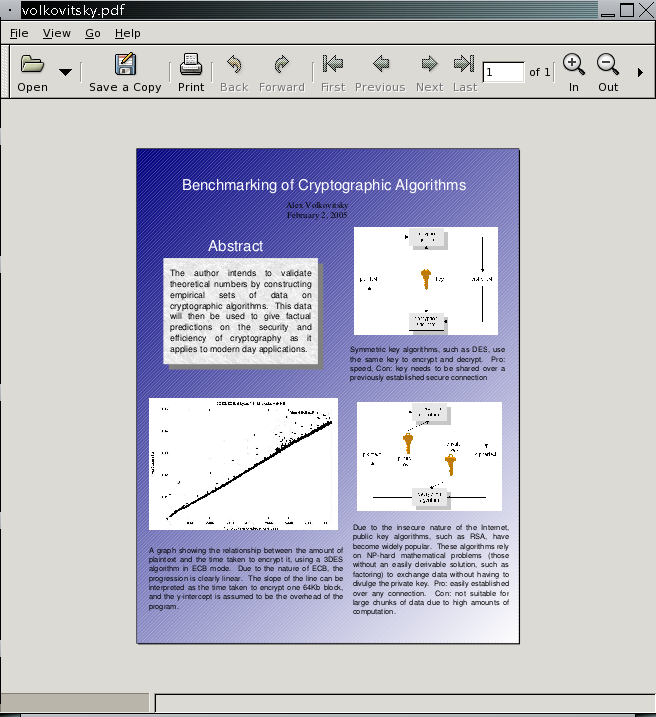

- Alex Volkovitsky Benchmarking of Cryptographic Algorithms, Poster - need PDF (1st Period)

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

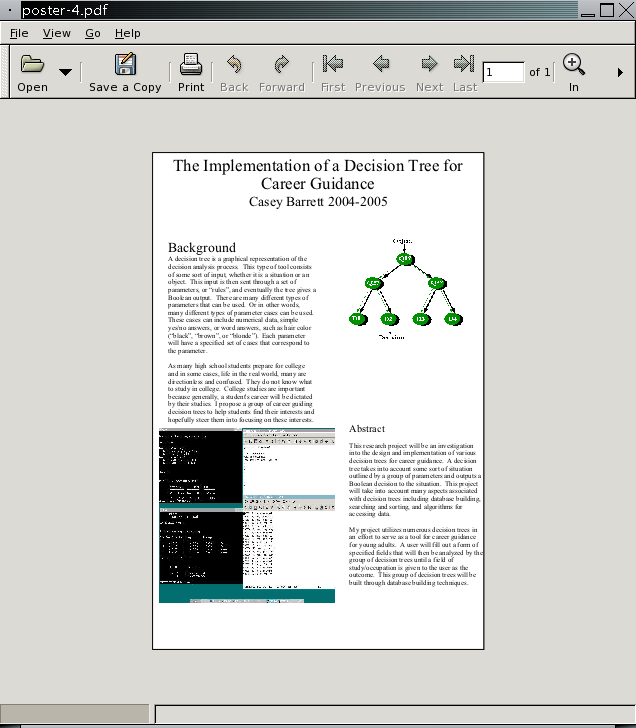

- C. Barrett

Implementation of a Decision Tree for Career Guidance (2nd Period)

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (2nd Period)


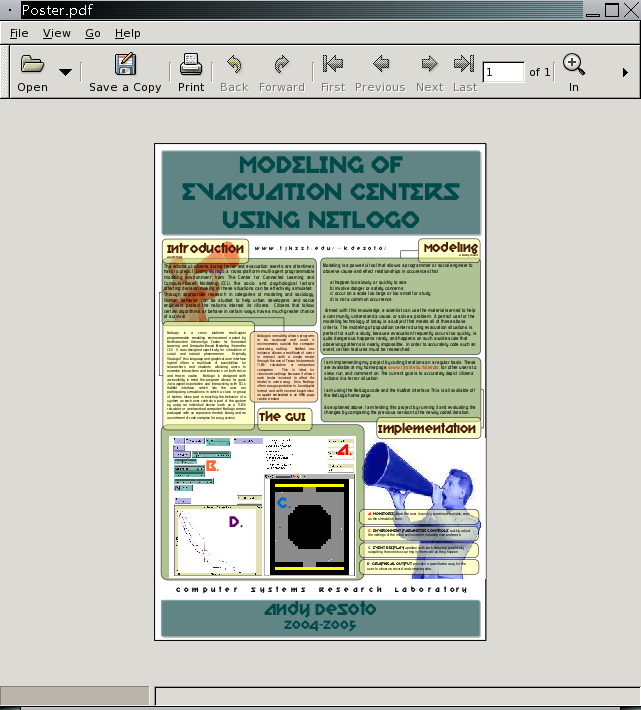

- A. Desoto Modeling Evacuation of Population Centers Using NetLogo (2nd period)

- Problem Statement: This project uses the NetLogo code to simulate evacuation of a user-defined 'danger area' with elements such as walls and collisions, event timing, and agent-based intelligence. The user initializes the desired environment, altering the presets if desired, chooses the behavior of the citizens, then runs the applet. Appropriate information is monitored and graphed in the GUI.

- Method for solving the problem: I am implementing my project by coding iterations on a regular basis. These are available at my home page, www.tjhsst.edu/kdesoto, for other users to view, run, and comment on. The current goal is to accurately depict citizens' actions in a terror situation. I am using the NetLogo code and the HubNet interface. This is all available off the NetLogo home page. As explained above, I am testing this project by running it and evaluating the changes by comparing the previous version to the newly-coded iteration.

- Sample result(s), finished product: NetLogo is a modeling environment created by Northwestern University's Center for Connected Learning and Computer-Based Modeling (hereafter CCL). It was designed specifically for simulation of social and natural phenomena. Originally StarLogoT, this language and graphical user interface hybrid offers a multitude of possibilities for researchers and students, allowing users to examine interactions and behaviors on both micro and macro scales. NetLogo is designed with accessibility in mind; the program allows for quick Java applet exportation and interactivity with CCL's HubNet interface, which lets the user run participatory simulations in which a class or group of testers takes part in enacting the behavior of a system, with each user controlling a part of the system from an individual device (such as a TI-83+ calculator or a networked computer). NetLogo comes packaged with an expansive models library and an assortment of code samples for easy access.

This project was set up to determine the most effective ways to get large numbers of citizens to safety in times of danger. To do this, two things must be determined. First, the parameters that add to the dangerousness of the environment should be measured. Second, once the situations of greatest danger are found, the most effective intelligences for escaping this danger must be pinpointed. Using the environment instantiated in the setup (see subsection "The Environment"), different situations are tested.
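
The project itself is written in NetLogo, but the core idea of agents leaving a walled area without colliding can be sketched in a few lines of Python. Everything here is an assumption for illustration: the grid encoding (`#` for walls), the function names, and the simple "always step toward the nearest exit" behavior stand in for the project's configurable citizen intelligences. Agents are assumed to start on open cells that can reach an exit, and not on an exit itself.

```python
from collections import deque

def distance_field(grid, exits):
    """Breadth-first search distance from every reachable open cell to the nearest exit."""
    rows, cols = len(grid), len(grid[0])
    dist = {cell: 0 for cell in exits}
    queue = deque(exits)
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in dist:
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return dist

def evacuate(grid, agents, exits, max_ticks=1000):
    """Move agents toward exits one cell per tick; return ticks until all have left."""
    dist = distance_field(grid, exits)
    agents = set(agents)
    for tick in range(1, max_ticks + 1):
        occupied = set(agents)
        next_agents = set()
        # Agents nearest an exit move first, which frees cells for those behind them.
        for r, c in sorted(agents, key=lambda a: dist[a]):
            options = [(r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)]
            options = [o for o in options
                       if o in dist and dist[o] < dist[(r, c)] and o not in occupied]
            if not options:
                next_agents.add((r, c))  # blocked by a wall or another agent: wait
                continue
            target = min(options, key=lambda o: dist[o])
            occupied.discard((r, c))
            if target not in exits:      # stepping onto an exit removes the agent
                occupied.add(target)
                next_agents.add(target)
        agents = next_agents
        if not agents:
            return tick
    return None  # not everyone escaped within max_ticks
```

Comparing the returned tick count across different wall layouts or agent behaviors is one simple way to measure which environments are most dangerous.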

- K. Diggs Optimizing Genetic Algorithms for use in Cyphers (2nd period)

- Problem Statement:

The project is formally titled "Optimizing Genetic Algorithms for Use in Cyphers". The poster shares this title. A section of the poster titled "Background" contains generation5's definition of the problem (found at www.generation5.org/content/2003/beale.asp), which is the Beale cypher: a set of three documents containing numbers that correspond to alphabetical characters, which form a message describing the location of a buried treasure. The poster also provides a reference, as does this report, to the source of the language describing the problem.

- Method for solving the problem:

The poster has two sections dealing with how the project attempts to solve the problem mentioned in its "Background" section, both of which I will enumerate and describe in this section. There is one paragraph devoted entirely to the heuristic algorithm used in the problem, which is what adapts the general concept of the genetic algorithm to the specific problem. It mentions the two-pronged approach to identifying accurate translations of the cypher, which includes a relative character-frequency analysis and a word search.

- Sample result(s), finished product:

There is no mention in the current version of the poster of the project results since the project is still in progress. I do have a screenshot of the program generating a chromosome, using it to translate the cypher, and then analyzing the character frequencies of the translation relative to that of normal English and coming up with a variance score (lower is better). I can opt to put this into the poster if need be, although the poster looks pretty crowded already (I would have to reduce the size of the text to insert the screenshot). No results can be shown for the word search since the program currently produces a litany of errors during the compiling process when attempted with the word search written in.
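
The character-frequency half of the heuristic can be sketched as follows. This is an illustration under stated assumptions, not the project's program: the frequency table holds commonly cited approximate percentages for English, and the score is taken to be the mean squared difference between observed and expected percentages, since the exact formula behind the project's variance score is not given.

```python
from collections import Counter

# Approximate relative letter frequencies in English text, in percent.
ENGLISH_FREQ = {
    "e": 12.7, "t": 9.1, "a": 8.2, "o": 7.5, "i": 7.0, "n": 6.7, "s": 6.3,
    "h": 6.1, "r": 6.0, "d": 4.3, "l": 4.0, "c": 2.8, "u": 2.8, "m": 2.4,
    "w": 2.4, "f": 2.2, "g": 2.0, "y": 2.0, "p": 1.9, "b": 1.5, "v": 1.0,
    "k": 0.8, "j": 0.15, "x": 0.15, "q": 0.10, "z": 0.07,
}

def variance_score(text):
    """Score a candidate translation; lower means closer to typical English."""
    letters = [ch for ch in text.lower() if ch in ENGLISH_FREQ]
    if not letters:
        return float("inf")  # nothing to compare, so treat as the worst case
    counts = Counter(letters)
    total = len(letters)
    squared_error = sum(
        (100 * counts[ch] / total - expected) ** 2
        for ch, expected in ENGLISH_FREQ.items()
    )
    return squared_error / len(ENGLISH_FREQ)
```

A genetic algorithm could use this score as (part of) its fitness function, preferring chromosomes whose translations score lower.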

- Research:

The project abstract, which is reproduced in the poster, mentions the usefulness of genetic algorithms that I have learned about through looking at other GA-based research projects in this class. I could replace it with an explicit reference, but I think the project abstract is more fitting since it is more meaningful to a passerby viewing the poster who wants to learn about the project. Once the word-search function is complete, I will also be able to compare the effectiveness of using different parameters for the heuristic and describe the results acquired from this research in the poster.


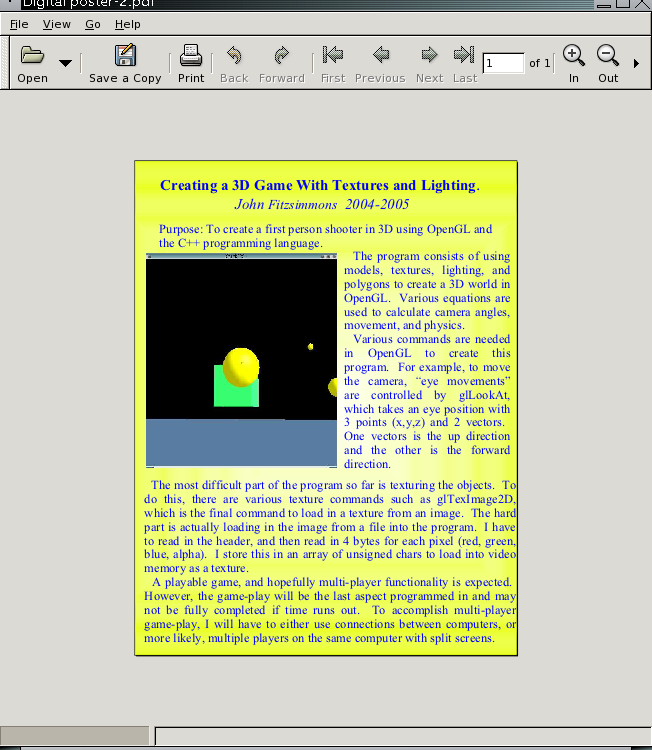

- J. Fitzsimmons Creating a 3D Game with Textures and Lighting

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (2nd Period)


- C. Goss Paintball Frenzy: Turn-based Graphical Game with an Optimized Minimax AI Agent

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (2nd Period)


- S. Hilber Modeling a Simulation of Evolutionary Behavior (2nd period)

- Problem Statement:

With the creation of Epstein and Axtell's Sugarscape environment, increasing emphasis has been placed on the creation of "root" agents - agents that can each independently act and interact to establish patterns identifiable in our everyday world. Models created for traffic patterns and flocking patterns confirm that these conditions are caused by each participating agent trying to achieve the best possible outcome for itself. The purpose of this project is to attempt to model evolutionary behavior in agents in an environment by introducing traits and characteristics that change with the different generations of agents. Using the modeling package MASON programmed in Java, I will be able to create an environment where agents will pass down their genetic traits through different generations. By adding certain behavioral traits and a common resource to the agents, I hope to create an environment where certain agents will prosper and reproduce while others will have traits that negatively affect their performance. In the end, a single basic agent will evolve into numerous subspecies of the original agent and demonstrate evolutionary behavior. This project will show that agents which possess the capability to change will change to better fit their environment.

- Method for solving the problem:

Conway's Game of Life was the first prominent agent-based model. Each cell was an "agent" that contained either a 0 or a 1 (dead or alive) depending on how many neighbors it had, and acted independently of the environment. Conway's Game of Life didn't lead to any profound insights, but it did pave the way for future agent-based modeling. The advantage of agent-based modeling, as many found out, is that it does not assume prior conditions. It is a method of building worlds "from the bottom up", where independent agents are able to create complex worlds without any overseers. One popular psychological game, Prisoner's Dilemma, spawned a series of games where agents tried to maximize their outcome, often at the expense of other agents.

Eventually, these agent-based models were incorporated in studies of flocking. The models created to show flocking behavior in birds did not incorporate flock leaders, as many presumed. Instead, the birds all acted for their own best interests, and directions and resting points were chosen as compromises of sorts.

Using the theory that independent agents can create organized structures such as flocks, Epstein and Axtell created the Sugarscape world in an effort to discover if social behaviors and human characteristics could emerge through independent actions. The Sugarscape model had agents able to breed, fight, trade, and die, and the core of the model was the resource sugar. Each agent had a metabolism rate which burned off its sugar; if it ran out of sugar, the agent died. Instead of behaving in isolation, however, the agents soon used their resources to work together. Agents shared sugar, sent "scouts" to gather sugar for the benefit of all, engaged in wars, and in general exhibited a startlingly large number of human behavioral characteristics. When spice, a second resource with its own metabolism rate, was introduced into the world of Sugarscape, trade emerged as agents tried to meet their needs as best they could, and tried to get the best deal as a result. These behaviors are surprising, but ultimately show the value of agent-based modeling and the useful insight it can provide.

- Sample result(s), finished product:

In order to simulate evolutionary behavior in an agent-based system, the agents need to simulate the real world as much as possible. In actual evolution (as described in computer science terms), two agents of opposing sex combine their genetic information at random to generate offspring with half of each parent's traits. Genetic mutations also happen at random, causing new traits that neither parent had in their genetic code. This gradual evolution creates swarms of different agents, and those agents that are best suited for their environments will be best able to survive and reproduce. Of course, several different portions of the environment could be home to different "breeds" of agents, and these different breeds could live alongside each other in separate societies. It is this phenomenon that this project is trying to recreate. By closely following the rules of genetics, the project should be able to show several different breeds of agents thriving, all descended from a single agent type.

Instead of passing on dominant and recessive genes, however, this project opts for a higher-level approach by using characteristics such as extroversion as the "genetic currency". While the human genome has tens of thousands of genes to determine these characteristics, such attention to detail is impossible and unnecessary for this project. By changing characteristics such as cooperation and extroversion on a slider, agents' traits will gradually change as they are passed down from generation to generation. This approximates actual genetic activity closely enough for this project. Agents will breed, die, and interact, eventually changing the genetic code of their societies to suit their needs.

Credit goes to Conway for The Game of Life, Epstein and Axtell for Sugarscape, the MASON team for developing MASON, and Dr. John A. Johnson for the IPIP-NEO.
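
The inheritance scheme described here, with slider-style traits passed from two parents and occasionally mutated, might look roughly like the sketch below. The project uses MASON in Java; this Python version is only an illustration, and the function name `make_child`, the trait range of 0.0 to 1.0, and the mutation parameters are all assumptions. Each trait is copied from a randomly chosen parent, so a child receives half of each parent's traits on average rather than exactly.

```python
import random

def make_child(parent_a, parent_b, mutation_rate=0.05, mutation_size=0.1, rng=random):
    """Combine two parents' trait dictionaries into a child's traits."""
    child = {}
    for trait in parent_a:
        # Inherit this trait from one parent, chosen at random.
        value = rng.choice((parent_a[trait], parent_b[trait]))
        # Occasionally nudge the value to model a random mutation.
        if rng.random() < mutation_rate:
            value += rng.uniform(-mutation_size, mutation_size)
        # Keep the trait on the assumed 0.0-1.0 slider.
        child[trait] = min(1.0, max(0.0, value))
    return child
```

For example, `make_child({"cooperation": 0.2, "extroversion": 0.9}, {"cooperation": 0.7, "extroversion": 0.1})` returns a dictionary with the same two keys. Passing a seeded `random.Random` instance as `rng` makes runs repeatable.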


- A. Li Techniques of Asymmetric File Encryption

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (2nd Period)


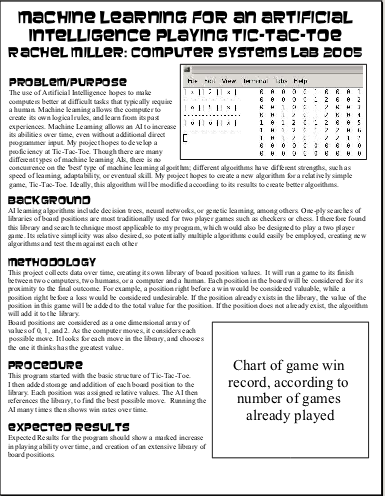

- R. Miller Use of Machine Learning to Develop Artificial Intelligence for Playing Tic-Tac-Toe (2nd period)

- Problem Statement:

To develop a machine learning algorithm to play Tic-Tac-Toe, so that its playing ability will increase with experience.

- Method for solving the problem:

This project collects data over time, creating its own library of board position values. It will run a game to its finish between two computers, two humans, or a computer and a human. Each position in the board will be considered for its proximity to the final outcome. For example, a position right before a win would be considered valuable, while a position right before a loss would be considered undesirable. If the position already exists in the library, the value of the position in this game will be added to the total value for the position. If the position does not already exist, the algorithm will add it to the library. Board positions are considered as a one dimensional array of values of 0, 1, and 2. As the computer moves, it considers each possible move. It looks for each move in the library, and chooses the one it thinks has the greatest value.

- Sample result(s), finished product:

Results will show the win rate of the algorithm for each progressive set of 50 games. For example, one outcome might be winning 23 games out of games 1-50, 27 games out of games 51-100, and then 30 games out of games 101-150. These results could be plotted to show a definite progression over time.

- Research background:

AI learning algorithms include decision trees, neural networks, and genetic learning, among others. One-ply searches of libraries of board positions are most traditionally used for two-player games such as checkers or chess. I therefore found this library and search technique most applicable to my program, which is also designed to play a two-player game. Its relative simplicity was also desirable, so that multiple algorithms could easily be created and tested against each other.
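
The library-and-one-ply-search approach described in the method can be sketched as below. This is a hedged illustration rather than the project's code: boards are tuples of nine values (0 empty, 1 and 2 for the two players) as in the description, but the names `record_game` and `choose_move`, the outcome encoding (+1 win, -1 loss, 0 draw), and the linear weighting by proximity to the end of the game are assumptions.

```python
def record_game(library, positions, outcome):
    """Add one finished game's positions to the library of position values.

    positions: boards seen after each of the learner's moves, in order.
    outcome: +1 for a win, -1 for a loss, 0 for a draw (assumed encoding).
    """
    n = len(positions)
    for i, board in enumerate(positions):
        # Positions nearer the end of the game receive more credit or blame.
        weight = (i + 1) / n
        library[board] = library.get(board, 0.0) + outcome * weight

def choose_move(library, board, player):
    """One-ply search: pick the empty square whose resulting board has the highest value."""
    best_move, best_value = None, None
    for square, mark in enumerate(board):
        if mark != 0:
            continue
        candidate = board[:square] + (player,) + board[square + 1:]
        value = library.get(candidate, 0.0)  # unseen positions count as neutral
        if best_value is None or value > best_value:
            best_move, best_value = square, value
    return best_move
```

Because unseen positions are treated as neutral, an untrained library always picks the first empty square; some random exploration would probably be needed for the library to grow beyond a narrow set of games.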


- Kyle Moffett KDUAL Research NO POSTER!

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (2nd Period)


- Jason Pan

Developing an AI for "Guess Who"

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster need PDF


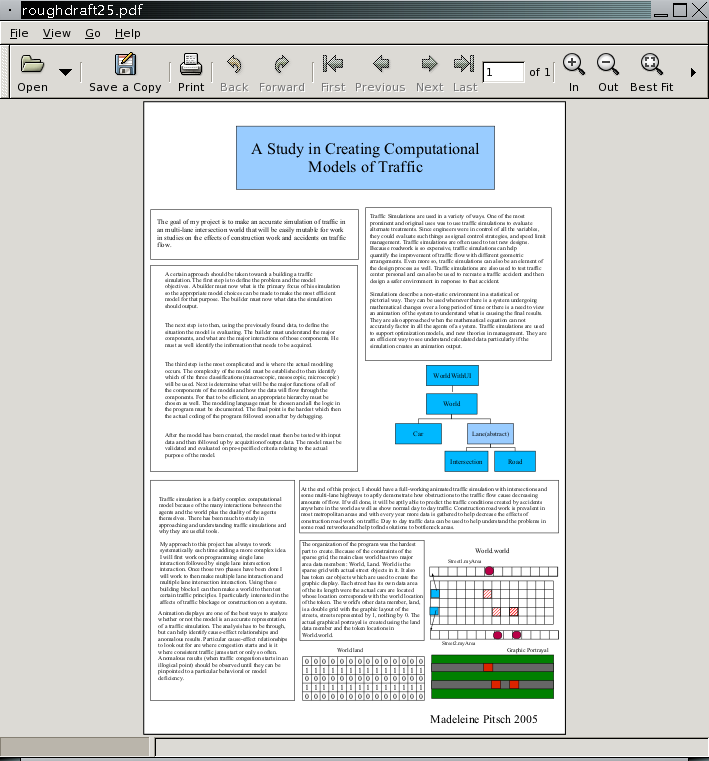

- M. Pitsch Computational Models of Traffic Flow (2nd period)

- Problem Statement:

I wish to develop more understanding about the effects of lane changing and acceleration on traffic flow.

- Method for solving the problem:

I am creating a traffic simulation that has cars change lanes depending on how far they are from the cars in front of them, how fast the cars in front of them are going, and the speed of the cars in the lane over.

- Sample result(s), finished product:

I have had various runs of the project but am continuing to refine the cars' method of choosing when to change lanes.

- Research:

I have been learning about the criteria for making a traffic simulation as well as techniques for organizing that simulation.
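
A lane-change rule based on the three factors named in the method (gap to the car ahead, its speed, and the speed of cars in the neighboring lane) could be sketched as follows. The `Car` class, the `should_change_lane` function, and the 20-metre `safe_gap` threshold are hypothetical; the project's actual rule is still being refined and is not given here. This sketch also ignores cars approaching from behind in the target lane.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Car:
    position: float  # metres along the road
    speed: float     # metres per second

def should_change_lane(car: Car, leader: Optional[Car],
                       neighbor_leader: Optional[Car],
                       safe_gap: float = 20.0) -> bool:
    """Decide whether `car` should move to the adjacent lane.

    leader: nearest car ahead in the current lane, or None.
    neighbor_leader: nearest car ahead in the adjacent lane, or None.
    """
    if leader is None:
        return False  # open road ahead, no reason to move
    gap = leader.position - car.position
    blocked = gap < safe_gap and leader.speed < car.speed
    if not blocked:
        return False
    if neighbor_leader is None:
        return True   # adjacent lane is empty ahead
    neighbor_gap = neighbor_leader.position - car.position
    # Move only if the other lane has room and is actually flowing faster.
    return neighbor_gap >= safe_gap and neighbor_leader.speed > leader.speed
```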


- T. Wismer Kernel Debugging User-space API Library (KDUAL),

(html)

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (2nd period)


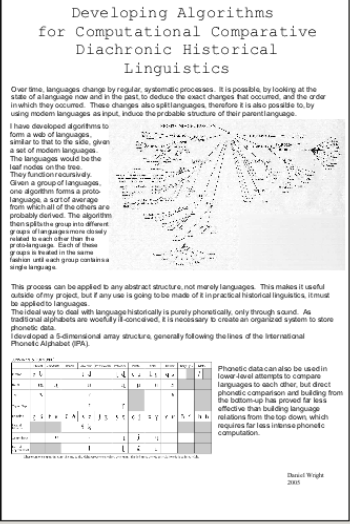

- D. Wright Developing Algorithms for Computational Comparative Diachronic Historical Linguistics (2nd period)

- Problem Statement:

To find and organize the links between languages separated chronologically and geographically, and to analyze the nature of these links, both for other uses and to further assist in the organization of the languages.

- Method for solving the problem:

Algorithms find the relations of derivation between languages by forming a "family tree." This utilizes a recursive algorithm that splits a group of languages into families based around core languages, then splits these families, and then the families within them, and so on until there is a complete tree of languages. Then, from phonetic data about these languages, correspondence-building algorithms find the direct sound changes between them. These changes could then possibly be used in reorganizing the groups, though this is not necessary.

- Sample result(s), finished product:

The big tree on the front of the paper is an example of a finished product. My product will not actually look that fancy, but conceptually it will be the same.

- Research:

Phonetics, mathematics of group theory, graph theory.
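
The recursive family-splitting step can be illustrated with a small sketch. It makes several assumptions that are not from the project: spelling-based edit distance over a short word list stands in for real phonetic data, the two most distant languages in a group are chosen as its "core" languages, and every other language joins the nearer core. All function names are hypothetical.

```python
def edit_distance(a, b):
    """Levenshtein distance between two strings."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                 # deletion
                           cur[j - 1] + 1,              # insertion
                           prev[j - 1] + (ca != cb)))   # substitution or match
        prev = cur
    return prev[-1]

def language_distance(words_a, words_b):
    """Sum of edit distances over aligned word lists (same meanings, same order)."""
    return sum(edit_distance(x, y) for x, y in zip(words_a, words_b))

def build_tree(names, lexicon):
    """Recursively split languages into two families around the most distant pair."""
    if len(names) == 1:
        return names[0]
    pairs = [(language_distance(lexicon[a], lexicon[b]), a, b)
             for i, a in enumerate(names) for b in names[i + 1:]]
    _, core_a, core_b = max(pairs)
    left, right = [core_a], [core_b]
    for name in names:
        if name in (core_a, core_b):
            continue
        to_a = language_distance(lexicon[name], lexicon[core_a])
        to_b = language_distance(lexicon[name], lexicon[core_b])
        (left if to_a <= to_b else right).append(name)
    return (build_tree(left, lexicon), build_tree(right, lexicon))

# Words for "water", "night", "milk" in four languages.
LEXICON = {
    "Spanish": ["agua", "noche", "leche"],
    "Italian": ["acqua", "notte", "latte"],
    "German": ["wasser", "nacht", "milch"],
    "Dutch": ["water", "nacht", "melk"],
}
tree = build_tree(list(LEXICON), LEXICON)
```

On this toy lexicon the top-level split separates Spanish and Italian from German and Dutch. Real data would need far longer word lists and a phonetic rather than orthographic representation.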


- R. Brady Experimentation on the Development of a Learning Agent Given Rules to a Game (3rd period)

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (pdf)

Poster html poster (3rd Period)


- B. Bredehoft Robot World: An Evolution Simulation

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (3rd period),

alternate poster


- Michael Feinberg The Comparison of Various Artificial Intelligence Types of Various Strengths (need PDF)

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (need PDF) (3rd Period)


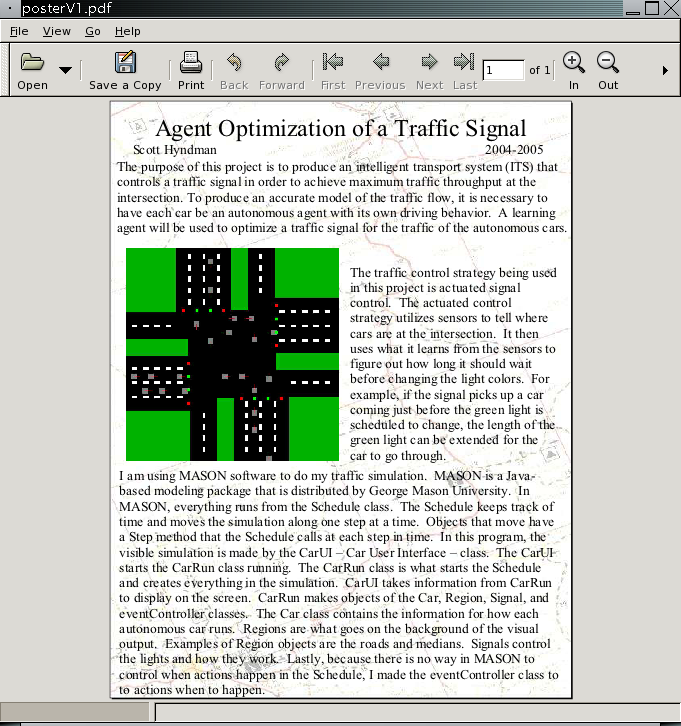

- S. Hyndman Agent Modeling and Optimization of A Traffic Signal (3rd period)

- Problem Statement:

Traffic in the Washington, D.C. area is known to be some of the worst traffic in the nation. Optimizing traffic signal changes at intersections would help traffic on our roads flow better. There are two goals in this project. The first is to create a computer model of a traffic signal. The second goal is to produce an intelligent transport system (ITS) that controls a traffic signal in order to achieve maximum traffic throughput at an intersection.

- Method for solving the problem:

The simulation of a traffic signal is created using the MASON Java library. MASON is a library of classes created by George Mason University for use in computer modeling. The ITS is created using reinforcement learning. The traffic signals are controlled by an agent that monitors the intersection and decides what should be done in order to optimize traffic flow.

- Sample result(s), finished product:

A sample run of the simulation can be found at http://www.tjhsst.edu/~shyndman/research/carrun.png. At this time there is no result for the ITS.

There are three main traffic signal control strategies: pretimed control, actuated control, and adaptive control.

* Pretimed Control: Pretimed control is the most basic of the three strategies. In the pretimed control strategy, the lights change based on fixed time values. The values are chosen based on data concerning previous traffic flow through the intersection. This control strategy operates the same no matter what the traffic volume is.

* Actuated Control: The actuated control strategy utilizes sensors to tell where cars are at the intersection. It then uses what it learns from the sensors to figure out how long it should wait before changing the light colors. For example, if the signal picks up a car coming just before the green light is scheduled to change, the length of the green light can be extended for the car to go through.

* Adaptive Control: The adaptive control strategy is similar to the actuated control strategy. It differs in that it can change more parameters than just the light interval length. Adaptive control estimates what the intersection will be like based on data from a long way up the road. For example, if the signal notices that there is a lot of traffic building up down the road during rush hour, it might lengthen the green light intervals on the main road and shorten them on the smaller road.
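
The difference between pretimed and actuated control can be made concrete with a short sketch. Under pretimed control the green phase simply lasts a fixed number of seconds; under actuated control each detected car can push the end of the phase back. The function name `actuated_green` and its parameters (`min_green`, `max_green`, `extension`) are assumptions for illustration and are not taken from the project or from MASON.

```python
def actuated_green(min_green, max_green, extension, arrival_times):
    """Return how long the green phase lasts under a simple actuated rule.

    min_green: green time (seconds) given with no traffic.
    max_green: hard cap so the cross street is never starved.
    extension: extra seconds granted after each detected car.
    arrival_times: seconds after the start of green at which the sensor sees a car.
    """
    end = min_green
    for t in sorted(arrival_times):
        # A car detected before the light is due to change extends the green,
        # but never beyond the maximum.
        if t <= end and t + extension > end:
            end = min(max_green, t + extension)
    return end
```

With `min_green=10`, `max_green=30`, and `extension=5`, cars detected at 8 and 12 seconds each extend the phase, while a car at 20 seconds arrives after the light has already changed and has no effect.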


- G. Maslov

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (3rd Period)


- E. Mesh

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (3rd Period)


- T. Mildorf Assessment of Sorting Parts By Variable Slot Width,

(also see sample graphics) (3rd period),

Conspectus

- Problem Statement:

1. Develop a computational model of the following scenario: A right rectangular prism is released from rest on an inclined plane with its edges parallel to those of the plane, with uniform friction between the plane and prism. As the prism slides over the edge of the plane, it acquires a certain angular velocity that it maintains through free-fall until it impacts a slot consisting of two fixed rectangular prisms a whole number of times. Impacts have constant elasticity E, a variable assuming values between 0 and 1 that represents a linear transformation between inelastic and elastic collisions in terms of energy.
2. Run this model hundreds of thousands of times with deviations in the dimensions of the prism and build a graphical representation of the outcomes (the prism being either accepted or rejected) as a large bitmap image.
3. Analyze the results and comment intelligently on whether it is feasible to sort by this methodology.

- Method for solving the problem:

1. Develop theoretical equations to describe the motion of the prism.
2. Transform the continuous system into a discrete construct by assuming fixed variables throughout small time steps dt and implement the resulting difference equations.
3. Improve computational efficiency via further approximation schemes such as look-up tables for trig variables.
4. Catalogue and analyze the results of many iterations of the experiment. This is accomplished by writing results to a file and then to an OpenGL window, and finally by observing general trends in the color-coordinated image.
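
Steps 2 and 3 can be illustrated for the simplest part of the scenario, the prism sliding down the incline before it reaches the edge. This is a minimal sketch under stated assumptions: it treats the prism as a point mass with kinetic friction coefficient `mu`, uses a basic Euler update, and the function names, the default time step, and the table size are hypothetical choices rather than the project's.

```python
import math

def slide(length, angle, mu, dt=1e-4, g=9.81):
    """Euler-integrate a block released from rest sliding `length` metres down an incline.

    Returns (time, speed) at the edge, or None if friction holds the block in place.
    """
    accel = g * (math.sin(angle) - mu * math.cos(angle))
    if accel <= 0:
        return None
    x = v = t = 0.0
    while x < length:
        # Difference equations: variables are held fixed across each small step dt.
        v += accel * dt
        x += v * dt
        t += dt
    return t, v

# Look-up table for sine, sampled every 0.1 degree, to avoid repeated trig calls.
TABLE_SIZE = 3600
SIN_TABLE = [math.sin(2 * math.pi * i / TABLE_SIZE) for i in range(TABLE_SIZE)]

def fast_sin(angle):
    """Approximate sin(angle) by rounding to the nearest table entry."""
    index = round(angle / (2 * math.pi) * TABLE_SIZE) % TABLE_SIZE
    return SIN_TABLE[index]
```

The sliding phase has a closed-form answer, a final speed of sqrt(2 * a * L) for acceleration a and length L, which makes it a convenient check on the time step before the same scheme is applied to the rotation and collision phases.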

- Sample result(s), finished product:

1. Data such as the following table has been collected; a larger run of the program (512,000 cases) is underway.
2. The conclusion section of the research paper will be drafted later this week.

ELASTICITY=0.9 SLOTFLUX=50 SLOTINITIAL=50 DIMFLUX=100

    0000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000
    0000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000
    0000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000
    0000000000000000111100000000000000000000000000000000000000000000000000000000000000000000000000000000
    0000000000001000111100000000000000000000000000000000000000000000000000000000000000000000000000000000
    ...
    1111111111111110001000000000000000000001110000000000000000000000000000000000000000000000000000000000
    1111111111111110011000000000100000000001110000000000000000000000000000000000000000000000000000000000
    1111111111113110001100000000000100000001110000000000000000000000000000000000000000000000000000000000
    1111111111111111001100000000000000000001110000100000000000000000000000000000000000000000000000000000

- Research: Tipler Physics.


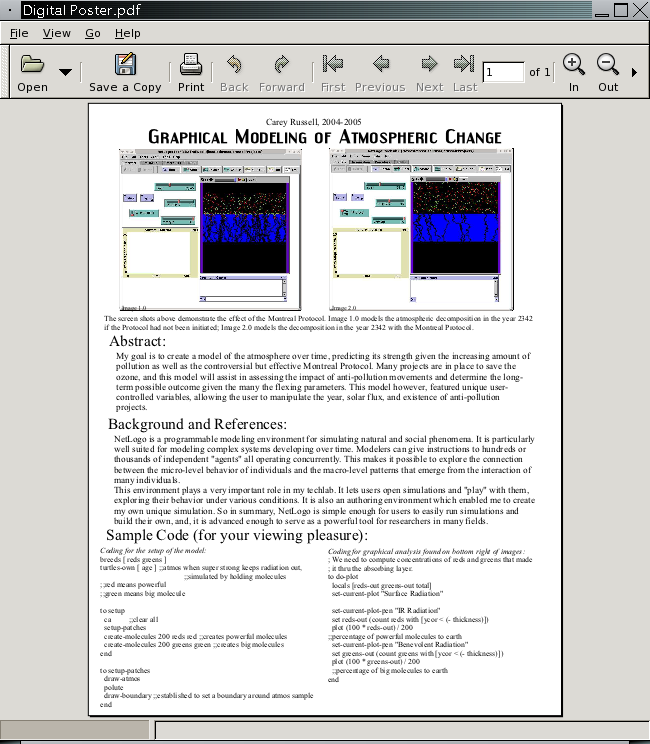

- C. Russell Graphical Modeling of Atmospheric Change

- Problem Statement and Method for solving the problem:

Ozone is both beneficial and harmful. Close to earth, the ozone that forms as a result of chemical reactions may cause a number of respiratory problems, particularly for young children. (These chemical reactions involve traffic pollution and heat energy from sunlight.) Although this may be the primary media representation of ozone, high up in the atmosphere ozone filters radiation from the sun. This region, called the stratosphere, protects the earth from the cell-damaging ultraviolet range of the electromagnetic spectrum. Essentially, this layer is preserving life as we know it.

Protecting the ozone layer is essential. Ultraviolet radiation from the Sun can cause a myriad of health problems, including skin cancer, eye cataracts, and a weakening of the body's natural immune system. Furthermore, ultraviolet radiation can be damaging to microscopic life in the surface oceans (which, although often overlooked, forms the basis of the world's marine food chain), to crops like rice and soya, and to polymers used in paint and clothing. Additionally, a loss of ozone in the stratosphere may even cause a drastic change in global climate.

The concentration of ozone (O3) in the stratosphere fluctuates naturally due to weather, volcanic activity, and solar flux (oscillations in the amount of energy being released from the Sun). Nevertheless, in the 1970s, scientists began to notice that man-made emissions of CFCs and other chemicals (refrigeration agents, aerosols, and cleaning agents) had caused a significant destruction of ozone, letting in more UV radiation. This hypothesis was initially disregarded due to a lack of evidence. Then in 1985, evidence of a large 'ozone hole' was discovered above Antarctica, growing larger and deeper each year. Because ozone fluctuates naturally with the Sun, the hole appears annually in springtime. More recently, evidence is emerging about significant depletion over the Arctic. This is quite a danger to society because this hole is much closer to the more populous regions of the Northern Hemisphere.

And so, in response to the fears about widespread global ozone depletion, the Montreal Protocol on Substances that Deplete the Ozone Layer (the Montreal Protocol, casually) was agreed in 1987. This was an international treaty legally binding participating developed nations to reduce the use of CFCs and other ozone-depleting substances. In 1990 and 1992, amendments to the Protocol brought the 'phase out' date for CFCs in developed countries forward to 1995.

- Sample result(s), finished product:

The goal is to model situations such as the following:

Situation 1: Montreal Protocol initiated in 1900, solar flux set at 20% of full potential, stratospheric thickness set at 21 km (normal range is 20-30 km), and radiation strength set at an arbitrary value of 6.

Situation 2: Situation 1 after 789 years.

Situation 3: Montreal Protocol never initiated, solar flux set at 20%, stratospheric thickness set at 21 km, and radiation strength set at 6. The only change from Situation 1 is the Montreal Protocol.

Situation 4: Situation 3 after 789 years.

Poster, (3rd period)



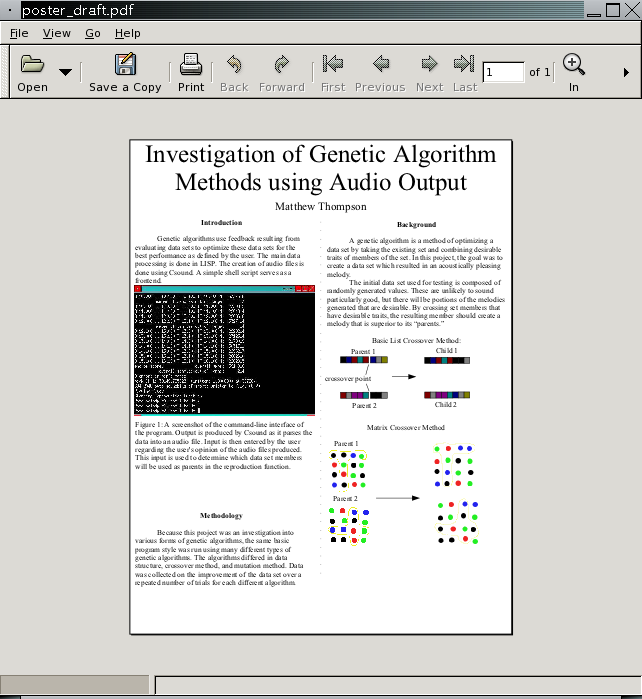

- M. Thompson Investigation of Genetic Algorithms Using Audio Output

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (3rd period)


- Justin Winkler Space System Modeling: Saturnian Moons

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (3rd Period)


- D. Banh, C. Bengston, B. Fleming, K. Gallagher, C. Kobelski, S. Wise

A Student Simulation of a Professional Software Development Group

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (4th Period)

- D. Banh, C. Bengston, B. Fleming, K. Gallagher, C. Kobelski, S. Wise

A Survey of the Various Software Development Cycles

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (4th Period)


- A. Liu, D. Neitzke, X. Yang

Car Simulation

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (4th Period)


- K. Silas

The Physical Modeling of a Bowling Ball in Action

- Problem Statement:

- Method for solving the problem:

- Sample result(s), finished product:

Poster (4th Period)
